Take a simple equation such as y = 2x. You can rearrange it into 2x – y = 0, and then write that as f(x,y) = 2x – y = 0.

Now we have some function of x and y that equals zero. If we plug in numbers that are valid points in the original equation y = 2x (in other words, coordinates where y is double x), we’ll get zero out as a result:

f(x,y) = 2x - y

f(1,2) = 2 * 1 - 2 = 0

f(2,4) = 2 * 2 - 4 = 0

f(0.5, 1.0) = 2 * 0.5 - 1.0 = 0

If we plug in numbers that don’t fit that pattern, we get nonzero answers as a result:

f(x,y) = 2x - y

f(2,1) = 2 * 2 - 1 = 3

f(5,5) = 2 * 5 - 5 = 5

f(-10, 20) = 2 * (-10) - 20 = -40
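If you'd rather let the computer do the arithmetic, here is a minimal Python sketch of the same checks. The names `on_line` and `off_line` are just labels I made up for the two groups of points above.

```python
def f(x, y):
    """Implicit form of the line y = 2x: zero exactly when (x, y) is on the line."""
    return 2 * x - y

# Points taken from the two lists above (the list names are made up for this sketch).
on_line = [(1, 2), (2, 4), (0.5, 1.0)]
off_line = [(2, 1), (5, 5), (-10, 20)]

for x, y in on_line + off_line:
    print(f"f({x}, {y}) = {f(x, y)}")
```

The first three lines of output should be zeros and the last three should match the nonzero values worked out by hand.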

What do these numbers mean? Are they useful for anything? As it turns out, they do have meaning and they are useful! They are part of what is needed to calculate the distance from the point (x,y) to the closest point on the curve. The other part of what we need is called the “gradient vector”.

Tangent Vector & Gradient Vector

As you probably know, there is a tangent vector at every point on a curve (let’s ignore things like asymptotes for now), and the tangent vector basically points from one point on the curve to the next point on the curve. The tangent vector can also be seen as the slope of the curve at that point.

A gradient vector is another property of a point, but it is different from a tangent vector in a couple of ways. First off, it is perpendicular to the tangent vector, so it sticks out away from the curve, and could be seen as a normal vector. Secondly, every point in the graph has a gradient vector, even if that point isn’t part of the curve and is just floating by itself in empty space.

The interesting part here is that the gradient vector at a point not on the curve will lead you towards the closest point on the curve (at least approximately; see the Caveat section at the end). This is because of a handy property: the line connecting a point to its closest point on the curve is perpendicular to the curve (perpendicular to the tangent) where the two meet.

So, if we can calculate a gradient vector for a point, we have the vector that points towards the closest point on the curve. Technically, it might be pointing away from the closest point on the curve instead of towards it, but that gets cleared up in the math.

Calculating Gradient Vector

The gradient vector is just the vector of partial derivatives of the function, one for each variable of the equation. If you have no idea what I’m talking about, here are 2 options:

1) You can watch some videos and learn about it here: Khan Academy: Multivariable calculus

2) You can have Wolfram Alpha calculate it for you by typing “gradient” before the function. Click this link to see what I mean: Wolfram Alpha: gradient x^2-y

Here are some example equations, along with their gradient vectors, to make sure that you either understand what’s going on, or are properly entering your equations into Wolfram Alpha. Note that a gradient vector may not always be constant, in which case it changes value from point to point! If it is constant, it’s the same gradient vector across the entire graph space.

line:

f(x,y) = 2x – y

gradient(x,y) = (2, -1)

cubic function:

f(x,y) = x^3 – 10y

gradient(x,y) = (3x^2, -10)

sine:

f(x,y) = sin(x) – y

gradient(x,y) = (cos(x), -1)

circle:

f(x,y) = x^2 + y^2 – 5

gradient(x,y) = (2x, 2y)
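A third way to sanity check a gradient is to approximate it numerically. The sketch below uses central differences to compare against the four hand-derived gradients above. The helper name `numeric_gradient`, the step size `h`, and the test point are all assumptions I picked for illustration.

```python
import math

def numeric_gradient(func, x, y, h=1e-6):
    """Approximate (df/dx, df/dy) at (x, y) with central differences."""
    dfdx = (func(x + h, y) - func(x - h, y)) / (2 * h)
    dfdy = (func(x, y + h) - func(x, y - h)) / (2 * h)
    return (dfdx, dfdy)

# Each entry pairs a function with the gradient listed above.
examples = {
    "line":   (lambda x, y: 2 * x - y,         lambda x, y: (2.0, -1.0)),
    "cubic":  (lambda x, y: x**3 - 10 * y,     lambda x, y: (3 * x**2, -10.0)),
    "sine":   (lambda x, y: math.sin(x) - y,   lambda x, y: (math.cos(x), -1.0)),
    "circle": (lambda x, y: x**2 + y**2 - 5,   lambda x, y: (2 * x, 2 * y)),
}

px, py = 1.5, -0.5  # arbitrary test point, chosen only for this sketch
for name, (func, grad) in examples.items():
    print(name, numeric_gradient(func, px, py), grad(px, py))
```

The two tuples printed on each line should agree to several decimal places. If they don't, the hand-derived gradient (or the way it was typed in) is probably off.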

Calculating Distance

Ok, so now that we have the value of our equation at some point (x,y) and we have the ability to find our gradient vector at that point, we have everything we need to calculate the distance from the point to the closest point on the curve.

All you need to do is divide the absolute value of the equation value at that point by the length of the gradient vector at that point. In other words: distance = |f(x,y)| / length of gradient(x,y).
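One way to see where that rule comes from is to pretend the function is a straight-line (first order) approximation near the point. This is only a sketch of the reasoning: the symbols p (the point) and d (the step from the point to the curve) are names I'm introducing here, and the approximation is only as good as the function is close to linear.

```latex
f(\mathbf{p} + \mathbf{d}) \approx f(\mathbf{p}) + \nabla f(\mathbf{p}) \cdot \mathbf{d} = 0
\quad\Longrightarrow\quad
\mathbf{d} = -\,\frac{f(\mathbf{p})}{\lVert \nabla f(\mathbf{p}) \rVert^{2}}\,\nabla f(\mathbf{p}),
\qquad
\lVert \mathbf{d} \rVert = \frac{\lvert f(\mathbf{p}) \rvert}{\lVert \nabla f(\mathbf{p}) \rVert}
```

The shortest step d that satisfies the linearized equation is parallel to the gradient, and its sign explains the direction rule: when f(p) is positive you step against the gradient, and when it is negative you step along it.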

Example 1 – Line

Let’s start with something super simple… y = x, aka x – y = 0 aka f(x,y) = x – y.

The gradient of that function is (1, -1) at every point on the graph. The magnitude of the gradient vector is the square root of 2.

What is the distance from the line to point P(2,0)?

Well, the value of the equation at that point, f(2,0), is 2, and the absolute value of that is still 2.

Next we divide that value by the magnitude of the gradient to get 2 / square root(2), which is just square root (2). So, the distance is square root of 2.

Since the value of the function at that point was positive before taking an absolute value, that means that the gradient points from the line to the point, so you can flip the gradient around to get (-1,1), and that is the direction that the closest point on the line is from the point P(2,0). If we travel square root of 2 units from P(2,0) down the vector (-1,1) we will get to the point (1,1) which is on the graph. That is the closest point on the graph to our point.

Note that it works out in this case, but usually the gradient vector won’t be the right magnitude since it’s not normalized. It happened that in this case it is, by dumb luck, but it usually isn’t.

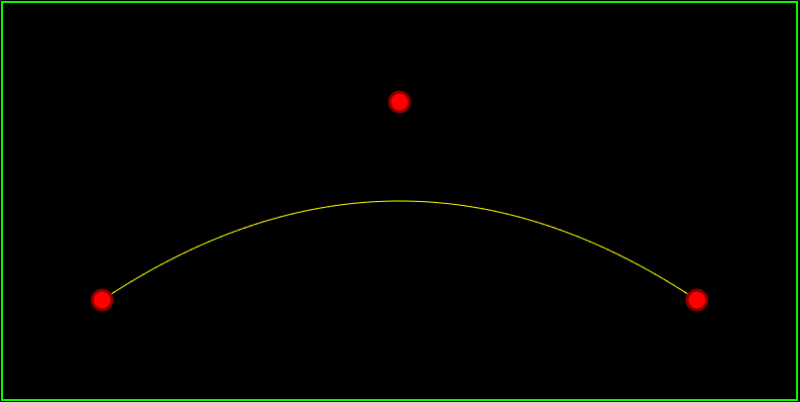
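Here is a small Python sketch of Example 1, assuming the sign rule described above (step against the gradient when the function value is positive, along it when negative). The helper name `estimate_closest_point` is made up for this sketch, and it normalizes the gradient before stepping so it doesn't rely on the magnitude happening to work out.

```python
import math

def f(x, y):
    return x - y

def gradient(x, y):
    return (1.0, -1.0)  # constant for a line

def estimate_closest_point(f, gradient, x, y):
    """Return (distance estimate, estimated closest point) for the point (x, y).

    Assumes the gradient is not the zero vector at (x, y).
    """
    value = f(x, y)
    gx, gy = gradient(x, y)
    length = math.hypot(gx, gy)
    distance = abs(value) / length
    # Positive value: the gradient points away from the curve, so flip it.
    sign = -1.0 if value > 0 else 1.0
    closest = (x + sign * distance * gx / length,
               y + sign * distance * gy / length)
    return distance, closest

distance, closest = estimate_closest_point(f, gradient, 2.0, 0.0)
print(distance, closest)
```

This should print a distance of about 1.414 (the square root of 2) and a closest point of about (1, 1), up to floating point rounding.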

Example 2 – Another Line

Let’s try a more complex line. y = 2x aka 2x – y = 0 aka f(x,y) = 2x – y.

The gradient of that function is (2, -1) with a magnitude of square root of 5 at all points on the graph.

What is the distance from that line to point P(1,5)?

Well, the value of the function at (1,5) is -3. When you take the absolute value of that, it becomes 3.

So, the distance is 3 / square root (5).

In this case, since the value of the function at the point was negative before we took the absolute value, the gradient points from the point toward the graph. So the line y=2x is 3/square root(5) units of length away from the point P(1,5), and the direction to travel from the point to get to the line is the same direction as the gradient vector at that point, aka (2,-1).

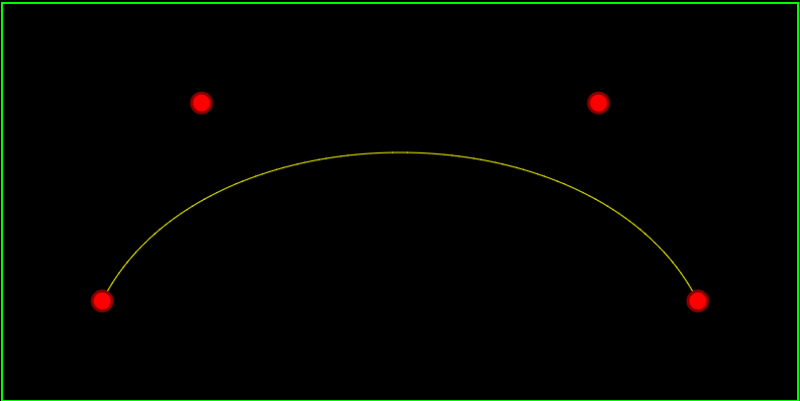
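As a cross-check on Example 2, the sketch below compares the gradient method against plain vector projection onto the line, which is a different technique that should agree exactly for a straight line. It assumes the line y = 2x passes through the origin with direction (1, 2); the variable names are made up for this sketch.

```python
import math

px, py = 1.0, 5.0

# Gradient method: f(x, y) = 2x - y with gradient (2, -1).
value = 2 * px - py
gx, gy = 2.0, -1.0
length = math.hypot(gx, gy)
distance = abs(value) / length
# value is negative here, so we step along the gradient (not against it).
sign = -1.0 if value > 0 else 1.0
gradient_point = (px + sign * distance * gx / length,
                  py + sign * distance * gy / length)

# Projection method: project P onto the line's direction vector (1, 2).
dx, dy = 1.0, 2.0
t = (px * dx + py * dy) / (dx * dx + dy * dy)
projected_point = (t * dx, t * dy)
projected_distance = math.hypot(px - projected_point[0], py - projected_point[1])

print(distance, gradient_point)
print(projected_distance, projected_point)
```

Both methods should report a distance of about 1.342 (which is 3 divided by the square root of 5) and a closest point of about (2.2, 4.4).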

Example 3 – Quadratic Function

For the last example let’s do y=x^2 aka x^2 – y = 0 aka f(x,y) = x^2 – y.

The gradient of that function is (2x,-1) and since it isn’t constant at all points, we can’t get the magnitude of the gradient yet.

What is the distance from that function to point P(2,0)?

Well, the value of f(2,0) is 4, which is still 4 if you take the absolute value.

The gradient at P(2,0) is (4,-1) with a magnitude of square root of 17.

So, the distance from point P(2,0) to the curve f(x,y) = x^2 – y is 4/square root(17), or about 0.97. Since the value of the function at the point was positive, the gradient points away from the curve, so to get from the point to the curve you should travel that distance in the opposite direction, along the vector (-4,1).
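Since the gradient isn't constant this time, it has to be evaluated at the point before taking its length. The sketch below does that for P(2,0), takes the estimated step, and then evaluates the function where the step lands. The names `landing` and `residual` are made up for this sketch.

```python
import math

def f(x, y):
    return x * x - y

def gradient(x, y):
    return (2.0 * x, -1.0)  # depends on x, so evaluate it at the point

px, py = 2.0, 0.0
value = f(px, py)
gx, gy = gradient(px, py)
length = math.hypot(gx, gy)
distance = abs(value) / length

# Positive value: step against the gradient to head towards the curve.
sign = -1.0 if value > 0 else 1.0
landing = (px + sign * distance * gx / length,
           py + sign * distance * gy / length)
residual = f(*landing)  # would be 0 if we had landed exactly on the curve

print(distance, landing, residual)
```

The residual comes out clearly nonzero, which is a hint that for a curved function the step lands near the curve rather than exactly on it.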

Caveat

Ok ok… the other shoe dropping here is that this distance calculation is only a distance ESTIMATE, and not always the right answer.

This is because if the gradient is constant (as it is for a line), the estimate will be exact, but when the gradient changes from point to point, following it doesn’t always trace a straight line from the point to the curve.

The good news, though, is that this estimate will often be less than or equal to the actual distance. Maybe not as useful as a for-sure distance calculation, but a lower bound estimate still has its uses (like in ray marching, or drawing vector graphics).

Basically, the straight line tells you the closest it could possibly be, but the actual gradient may take a longer, curving path, from the point to the curve.
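To get a feel for how far off the estimate can be, here is a rough brute-force comparison for the curve y = x^2 and the point P(2,0). It samples the curve densely and keeps the smallest distance found. The sampling range of -3 to 3 and the sample count are arbitrary choices I made for this sketch, so treat the "actual" number as approximate.

```python
import math

def f(x, y):
    return x * x - y

px, py = 2.0, 0.0

# Gradient-based estimate: |f| divided by the length of the gradient (2x, -1).
estimate = abs(f(px, py)) / math.hypot(2.0 * px, -1.0)

# Brute force: sample points (x, x^2) on the curve and keep the nearest one.
samples = 200_001
actual = min(
    math.hypot(px - x, py - x * x)
    for x in (-3.0 + 6.0 * i / (samples - 1) for i in range(samples))
)

print(estimate, actual)
```

For this point the estimate is roughly 0.97 while the sampled distance is roughly 1.36, so the estimate undershoots here.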

More Info

Here’s the article that got me started down this path, trying to find the answer to this question, while trying to understand the main topic:

IQ: Distance Estimation

And here’s a link that really helped me understand what a gradient was, and what it was all about:

Vector Calculus: Understanding the Gradient

Khan academy videos about these topics:

Khan Academy: Multivariable calculus